About

Hi! I am Jose. I hold a PhD in Physics from the Universidad Autónoma de Madrid, Spain (2019), and I am an expert in simulating the electronic properties of materials. After finishing my PhD in Madrid, I worked as a Postdoctoral Research Associate in Bremen and Hamburg, as well as a Software Developer and Data Manager in the FAIRmat project at the Humboldt University of Berlin. I now hold a permanent position as a Senior Data Manager at the Bundesanstalt für Materialforschung und -prüfung (BAM).

I am passionate about Science and Software Development. You can find details about the latest software I have contributed to on my GitHub profile. Here are some of the projects I have been involved in that you might find interesting:

- nomad-coe/nomad: The main code repository of the NOMAD data repository. From 2022 to 2024, I developed schemas and parsers for NOMAD. The main outcome of my time there was the nomad-simulations schema, which is currently under very active development and in use.
- BAMresearch/bam-masterdata: A set of tools and schema definitions for the Materials Science data model developed at BAM. I am currently working on this together with experts from a wide range of fields (from atomistic simulations to structural health monitoring).
- JosePizarro3/RAGxiv: A set of Python classes and functions that wrap existing implementations of LLM functionalities to extract structured metadata from arXiv papers. This work resulted in a separate Python package, JosePizarro3/pyrxiv, which uses the arXiv API to store the queried arXiv papers in HDF5 files for fast data retrieval.
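For readers curious about what "using the arXiv API" involves, here is a minimal sketch with the Python standard library only. It builds a query URL for the public arXiv API endpoint and extracts the id, title, and abstract of each entry from the Atom XML it returns. The function names are hypothetical and this is not pyrxiv's interface; the HDF5 storage step is left out.

```python
import urllib.parse
import xml.etree.ElementTree as ET

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # namespace of the Atom feed returned by arXiv


def build_query_url(category, max_results=10, start=0):
    """Hypothetical helper: URL for the newest papers in an arXiv category, e.g. 'cond-mat.str-el'."""
    params = {
        "search_query": f"cat:{category}",
        "start": start,
        "max_results": max_results,
        "sortBy": "submittedDate",
        "sortOrder": "descending",
    }
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def parse_feed(atom_xml):
    """Hypothetical helper: extract id, title and abstract of every entry in an Atom response."""
    root = ET.fromstring(atom_xml)
    papers = []
    for entry in root.findall(f"{ATOM}entry"):
        papers.append({
            "id": entry.findtext(f"{ATOM}id", default="").strip(),
            # Titles and abstracts contain hard line breaks; collapse all whitespace.
            "title": " ".join(entry.findtext(f"{ATOM}title", default="").split()),
            "summary": " ".join(entry.findtext(f"{ATOM}summary", default="").split()),
        })
    return papers
```

To run a real query, pass the bytes returned by `urllib.request.urlopen(build_query_url("cond-mat.str-el")).read()` to `parse_feed`. Keeping the network call outside the parser makes the parsing step easy to test on stored responses.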

Research

Throughout my career, I have focused on studying strong electronic correlation effects in real materials. These correlations are crucial for physical phenomena such as superconductivity and magnetism, and they are also key to understanding how materials interact with external electromagnetic fields. Studying them requires highly complex simulations.

As a Computational Materials Scientist, I am an expert in developing these methodologies, in analyzing the resulting complex behavior, and in using and writing highly optimized codes.

1. Research Data Management

Since 2022, I have been working as an expert in Research Data Management (RDM). RDM is a key aspect of Science and Good Scientific Practice that is often overlooked. With the development of powerful Artificial Intelligence (AI) and Machine-Learning (ML) methods in recent years, it has become clear that a good treatment of data is key for the future of Science. A prominent example is the 2024 Nobel Prize in Chemistry, awarded in part for AI-based protein structure prediction (AlphaFold), which was only possible thanks to the existence of large, curated protein structure databases.

As an RDM expert, my goal is to provide a service to the scientific community: I develop data models and ontologies, write parsers and mappers for the generated data, and work on the software infrastructure behind these databases. In doing so, I follow the FAIR principles, i.e., data has to be organized to be Findable, Accessible, Interoperable, and Reusable.
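To make the idea of a data model plus a parser concrete, here is a minimal sketch. A small schema is defined with Python dataclasses, and a parser maps a plain-text simulation output onto it, so the result can be exported as structured JSON. The schema classes, field names, and the `Program: ... / Total energy = ...` output format are all hypothetical; this is not the NOMAD or bam-masterdata schema. Storing every value with its unit and recording which program (and version) produced it is one small example of what makes data interoperable and reusable.

```python
import json
import re
from dataclasses import dataclass, asdict


@dataclass
class TotalEnergy:
    """Hypothetical schema section: a value is always stored together with its unit."""
    value: float
    unit: str


@dataclass
class SimulationRecord:
    """Hypothetical schema for one simulation run (not the NOMAD or BAM schema)."""
    program_name: str
    program_version: str
    total_energy: TotalEnergy


def parse_output(text):
    """Map a hypothetical plain-text code output onto the schema above."""
    program = re.search(r"^Program:\s*(\S+)\s+(\S+)", text, re.MULTILINE)
    energy = re.search(
        r"^Total energy\s*=\s*(-?\d+\.?\d*(?:[eE][-+]?\d+)?)\s*(\w+)", text, re.MULTILINE
    )
    if program is None or energy is None:
        raise ValueError("output does not match the expected format")
    return SimulationRecord(
        program_name=program.group(1),
        program_version=program.group(2),
        total_energy=TotalEnergy(float(energy.group(1)), energy.group(2)),
    )


raw = """Program: mycode 1.2.0
Total energy = -15.832 eV
"""
record = parse_output(raw)
# asdict() converts nested dataclasses recursively, giving JSON-ready metadata.
print(json.dumps(asdict(record), indent=2))
```

Raising an error on unexpected input, instead of silently filling in defaults, keeps malformed data out of the database.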

2. Strongly Correlated Materials

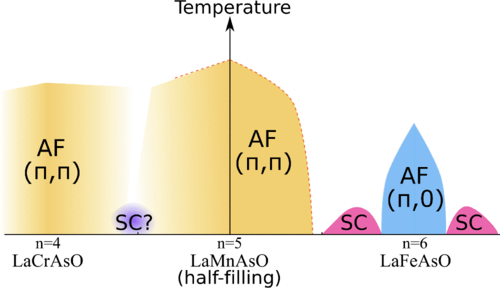

The main topic of my career has been studying strongly correlated materials and proposing novel experiments to understand their physics. These systems show a plethora of effects, such as the Mott (metal-to-insulator) transition and Hund metallicity. The electronic states of these materials are known to be intimately related to high-Tc superconductivity and magnetism. Specifically, I studied iron-based superconductors, magic-angle twisted bilayer graphene, and 2D materials.

Understanding these states, and using the capabilities of AI and ML to propose new materials with such properties, is therefore key to solving the long-standing problem of high-Tc superconductivity and to exploiting the full potential of these materials in real-world applications.

3. Methods and Software Development

Studying strongly correlated materials and running ML codes are both computationally very demanding. For strongly correlated materials, the paradigmatic model is the Hubbard model. It has been known and extended for decades, but its exact solution cannot be obtained in general, so new approximations, methodologies, and optimized algorithms are often developed to reach meaningful solutions.
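For reference, the standard single-band form of the Hubbard Hamiltonian is shown below (notation and sign conventions vary between references). Here $t$ is the hopping amplitude between nearest-neighbor sites $\langle i,j \rangle$, $U$ is the on-site Coulomb repulsion, $c^{\dagger}_{i\sigma}$ creates an electron with spin $\sigma$ on site $i$, and $n_{i\sigma} = c^{\dagger}_{i\sigma} c_{i\sigma}$:

```latex
H = -t \sum_{\langle i,j \rangle, \sigma} \left( c^{\dagger}_{i\sigma} c_{j\sigma} + \mathrm{h.c.} \right)
    + U \sum_{i} n_{i\uparrow} n_{i\downarrow},
\qquad
\dim \mathcal{H}_{N} = 4^{N}
```

Each site can be empty, singly occupied with spin up or down, or doubly occupied, so the Hilbert space of $N$ sites grows as $4^{N}$. This exponential growth is why exact solutions are limited to small clusters and special limits. The multiorbital versions used for materials such as iron-based superconductors add an orbital index, interorbital Coulomb terms, and Hund's coupling $J$.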

In practice, obtaining solutions of the Hubbard model for a real material involves several different tools and managing workflows. A typical workflow includes:

- choosing the material and relaxing its crystal structure;
- performing Density Functional Theory (DFT) simulations for the ground-state properties;
- projecting and downfolding onto a smaller subspace, which makes the original material problem more tractable;
- and finally, solving the interacting many-body quantum problem defined by the resulting Hubbard model.
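The last step of this workflow, solving the interacting many-body problem, can be illustrated on the smallest nontrivial case: a two-site Hubbard model (a dimer) with two electrons, solved exactly by diagonalizing the Hamiltonian. The sketch below is pure Python: basis states are occupation bitstrings, fermionic signs follow a Jordan-Wigner ordering of the spin orbitals, and the eigenvalues come from the cyclic Jacobi algorithm. It is only a toy exact-diagonalization example, not the Slave-Spin Mean-Field method or any production solver, and all function names are hypothetical. Its result can be checked against the known ground-state energy $E_0 = U/2 - \sqrt{U^2/4 + 4t^2}$.

```python
import math
from itertools import combinations


def build_basis(n_modes, n_particles):
    """All occupation bitstrings with n_particles electrons in n_modes spin orbitals."""
    return [sum(1 << m for m in occ) for occ in combinations(range(n_modes), n_particles)]


def apply_hop(state, i, j):
    """Apply c_i^dagger c_j to a bitstring; return (sign, new_state) or None if it vanishes."""
    if not (state >> j) & 1:
        return None
    s = state ^ (1 << j)  # annihilate mode j
    sign = (-1) ** bin(s & ((1 << j) - 1)).count("1")  # occupied modes before j
    if (s >> i) & 1:
        return None
    sign *= (-1) ** bin(s & ((1 << i) - 1)).count("1")  # occupied modes before i
    return sign, s | (1 << i)


def hubbard_dimer(t, u):
    """Hamiltonian matrix of the two-site Hubbard model with two electrons.
    Spin-orbital index = 2 * site + spin (spin 0 = up, 1 = down)."""
    basis = build_basis(4, 2)
    index = {s: k for k, s in enumerate(basis)}
    h = [[0.0] * len(basis) for _ in basis]
    for k, s in enumerate(basis):
        for site in range(2):  # on-site repulsion U n_up n_down
            if (s >> (2 * site)) & 1 and (s >> (2 * site + 1)) & 1:
                h[k][k] += u
        for spin in (0, 1):  # hopping -t (c_0s^dag c_1s + h.c.)
            for i, j in ((spin, 2 + spin), (2 + spin, spin)):
                res = apply_hop(s, i, j)
                if res is not None:
                    sign, s2 = res
                    h[index[s2]][k] += -t * sign
    return h


def jacobi_eigenvalues(a, tol=1e-20, max_sweeps=100):
    """Eigenvalues of a real symmetric matrix via cyclic Jacobi rotations."""
    a = [row[:] for row in a]
    n = len(a)
    for _ in range(max_sweeps):
        if sum(a[p][q] ** 2 for p in range(n) for q in range(n) if p != q) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                tan = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(tan * tan + 1)
                s = tan * c
                for k in range(n):  # A <- A P (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


t, u = 1.0, 4.0
energies = jacobi_eigenvalues(hubbard_dimer(t, u))
exact = u / 2 - math.sqrt(u ** 2 / 4 + 4 * t ** 2)
print(f"ED ground state: {energies[0]:.6f}, analytic: {exact:.6f}")
```

Even this dimer has 6 states; for realistic cluster sizes the matrix dimension grows exponentially, which is why approximate solvers and optimized algorithms are needed.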

I worked on implementing new approximations, such as the Slave-Spin Mean-Field (SSMF) method, and on optimizing the code by designing new algorithms; see J.M. Pizarro, PhD Thesis: Electronic correlations in multiorbital systems, arXiv:1912.04141.

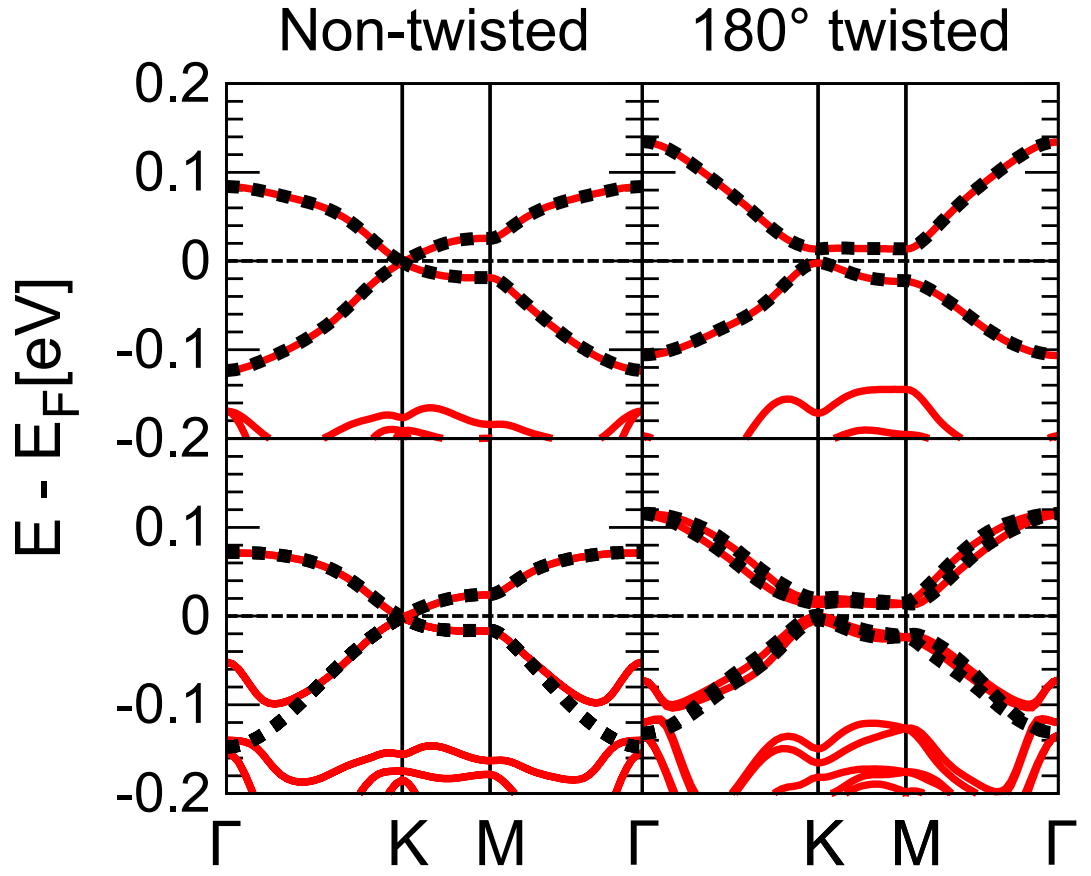

4. High-Throughput and Code Automation

An important step in studying strongly correlated materials is to define a smaller subspace of bands and to solve the Hubbard model for this subset. However, this projection or downfolding step still requires substantial human input, i.e., a scientist has to decide which bands to project onto.

In recent years, there has been a huge push to automate both simulations and experiments in Materials Science. However, because of this manual step, an expert in strong correlations is still needed to study what is happening in these materials.

I am currently working on improving this procedure to make the study of strongly correlated materials more automated, or at least to reduce human intervention to a minimum.
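As an illustration of why band selection is hard to automate, here is a deliberately naive heuristic, sketched under stated assumptions. It selects all bands that enter an energy window around the Fermi level at some k-point, then checks whether that set is isolated, i.e., separated by a minimum gap from the bands directly below and above at every k-point. Entangled sets would require an additional disentanglement step. The function names, the window, and the gap threshold (assumed in eV) are hypothetical; this is not the procedure I am developing, and it ignores orbital character, which in practice often decides the choice.

```python
def select_bands(bands, e_fermi, window):
    """Indices of bands that enter [e_fermi - window, e_fermi + window] at any k-point.
    Assumes bands[n][k] is sorted by energy in n at every k-point."""
    lo, hi = e_fermi - window, e_fermi + window
    return [n for n, band in enumerate(bands) if any(lo <= e <= hi for e in band)]


def is_isolated(bands, selected, min_gap=0.1):
    """True if the selected bands form a contiguous block separated by more than
    min_gap from the neighboring bands at every k-point (no disentanglement needed)."""
    if not selected or selected != list(range(selected[0], selected[-1] + 1)):
        return False
    first, last = selected[0], selected[-1]
    n_k = len(bands[first])
    if first > 0:
        gap_below = min(bands[first][k] - bands[first - 1][k] for k in range(n_k))
        if gap_below <= min_gap:
            return False
    if last < len(bands) - 1:
        gap_above = min(bands[last + 1][k] - bands[last][k] for k in range(n_k))
        if gap_above <= min_gap:
            return False
    return True


# Toy band structure: 4 bands on 3 k-points (hypothetical numbers).
bands = [
    [-5.0, -4.5, -4.0],
    [-1.0, 0.0, 1.0],
    [-0.5, 0.2, 1.5],
    [3.0, 3.5, 4.0],
]
selected = select_bands(bands, e_fermi=0.0, window=0.6)
print(selected, is_isolated(bands, selected))
```

When the selected bands touch or cross other bands, this heuristic returns `False`, and that is exactly where an expert currently has to decide how to proceed.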